GSoC'24: Differentiable Logic for Interactive Systems and Generative Music

a(nother) summer retrospective

Last summer, I participated in Google Summer of Code for the first time, working with GRAME on improving support for using the Faust audio programming language on the web.

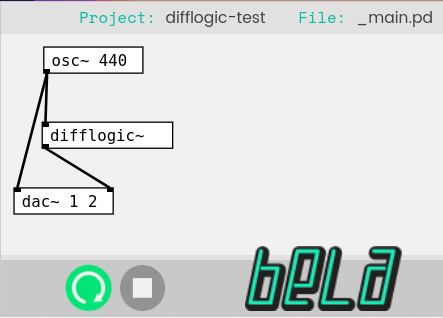

This summer, I returned for another round, this time working with BeagleBoard.org on a project that defies such easy explanation: “Differentiable Logic for Interactive Systems and Generative Music”. What does that mean? Let’s break it down.

Ingredients

The key ingredients that went into this project are difflogic, Bela, and bytebeat, which roughly correspond to “Differentiable Logic”, “Interactive Systems”, and “Generative Music”, respectively. I explain what these ingredients are and how they fit together in the intro video I created at the start of the project, but I’ll recap briefly here.

Differentiable Logic: difflogic

In 2022, Felix Petersen, Christian Borgelt, Hilde Kuehne, and Oliver Deussen published an intriguing paper on training machine learning models built out of networks of logic gates rather than “neurons” (as in neural networks). The motivation for using logic gates is that, unlike neurons, your computing devices (e.g. the ALU) are actually built out of them. Learning a model that better corresponds to your hardware cuts out the “middle man” of additional layers of abstraction between your task and its execution. Accordingly, Petersen et al. reported substantial gains in efficiency from using logic gates in terms of model size and inference speed. Neat!

(The challenge of using logic gate networks, compared to neural nets, is that logic gates are discrete and not differentiable; this is the main challenge addressed by Petersen et al.’s work, which uses a combination of real-valued logic and relaxation to enable the use of gradient descent when learning logic gate networks.)
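As a rough illustration of what “real-valued logic and relaxation” can look like, here is a minimal sketch in plain Python. It assumes the probabilistic relaxations commonly used for this purpose (for example, AND as a product) and a softmax-weighted blend over candidate gates during training. Only four gates are included for brevity, and the function names are made up for this example; this is not difflogic’s API.

```python
import math

# Real-valued relaxations of a few two-input gates, for inputs in [0, 1].
# On exact 0/1 inputs they agree with the ordinary Boolean gates.
SOFT_GATES = {
    "and": lambda a, b: a * b,
    "or": lambda a, b: a + b - a * b,
    "xor": lambda a, b: a + b - 2 * a * b,
    "nand": lambda a, b: 1 - a * b,
}


def softmax(logits):
    """Turn arbitrary real scores into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def soft_gate(a, b, logits):
    """Training-mode output: a probability-weighted blend of all candidate gates."""
    weights = softmax(logits)
    return sum(w * gate(a, b) for w, gate in zip(weights, SOFT_GATES.values()))


def hard_gate(a, b, logits):
    """Inference-mode output: only the single most likely gate survives."""
    names = list(SOFT_GATES)
    best = names[logits.index(max(logits))]
    return SOFT_GATES[best](a, b)
```

Because `soft_gate` is a smooth function of both its inputs and its logits, gradient descent can adjust which gate each node “wants” to be; `hard_gate` then shows the discretized node that would actually be exported.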

The paper was followed by the release of difflogic, a PyTorch-based library for differentiable logic gate networks.

Interactive Systems: Bela

Bela is the “platform for beautiful interaction”, spun out of the Augmented Instruments Lab in 2016 and crowdfunded via Kickstarter. It’s an open-source embedded platform for real-time audio and sensor processing built on the BeagleBone Black. It enables people to build highly responsive instruments, installations, and interactions, and it supports a number of languages & environments for doing so.

Generative Music: bytebeat

bytebeat is a musical practice which involves writing short expressions that describe audio as a function of time, generating sound directly, sample-by-sample. For example, the expression ((t>>10)&42)*t generates the following audio:

The bytebeat approach is rather unusual compared to the conventional computer music approach, which tends to feature a rigid separation of different layers (control vs. synthesis, score vs. orchestra). Bytebeat expressions, in contrast, simultaneously determine everything — timbre, rhythm, melody, structure — from the level of individual audio samples on up.
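To make the “audio as a function of time” idea concrete, the example expression ((t>>10)&42)*t can be evaluated one sample at a time. The sketch below assumes the classic bytebeat convention of keeping only the low 8 bits of each result and playing them back as unsigned samples at 8 kHz; the helper names are made up for this example.

```python
def bytebeat(t):
    """The example expression; t is the sample index."""
    return ((t >> 10) & 42) * t


def render(expr, n_samples):
    """Evaluate expr at t = 0, 1, 2, ... and keep the low 8 bits of each result."""
    return bytes(expr(t) & 0xFF for t in range(n_samples))


# Two seconds of audio, assuming an 8 kHz sample rate.
samples = render(bytebeat, 2 * 8000)
```

The resulting bytes could be written to a raw 8-bit unsigned audio file and played back at 8 kHz.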

(Representing music in the form of very compact programs has been a perennial interest of mine ever since I first encountered bytebeat several years ago.)

Goals

Take these ingredients, put them all together, and you get some interesting possibilities for the use of (title drop) differentiable logic for interactive systems and generative music!1 The overarching goal of this project is to explore and realize some of those possibilities.

The Bela is an excellent platform for building low-latency interactive applications, but as an embedded system sans hardware acceleration, it’s rather underpowered for conventional models. Difflogic offers a way to build models that can perform useful tasks more efficiently than conventional neural networks. Thus, the technical motivation for this project is taking advantage of the relative efficiency of logic gate networks to enable new machine learning applications in embedded, real-time contexts such as Bela projects.

Bytebeat expressions typically involve many bit-twiddling operations, consisting primarily of logic gates (bitwise AND, OR, XOR, NOT) and shifts; this suggests a natural affinity with difflogic, wherein models consist of networks of logic gates. This provides a creative motivation for the project: by applying the bytebeat approach (sound as pure functions of time) to logic gate networks, perhaps we can find compact representations for sounds and an interesting space for creative exploration and manipulation.

With these motivations in place, we can divide the project’s goals into three areas:

- Integration: Enable the use of logic gate networks in interactive applications on embedded systems such as the Bela and in computer music languages such as those supported on Bela. Find ways to take advantage of the BeagleBone hardware (e.g. PRUs, explained below) for better integration with difflogic.

- Experiment: Investigate the training & usage of difflogic models for various tasks and the possibility of combining difflogic with other useful machine learning techniques and methods.

- Application: Build applications to demonstrate the capabilities of difflogic models for interactive systems and generative music. Explore creative affinities between difflogic and existing creative practices such as bytebeat.

More detailed information about the project’s background and goals is available in the proposal.

Execution

Once again,2 all of this was a bit much for a 12-week project. For the most part, I spent the first half of the summer working on infrastructure & integration work, and the second half working on a creative application to play with sound-generating logic gate networks. The proposed machine learning experiments drew the short straw, in part for reasons described below.

Integrations

Language integration

The Bela platform supports a number of programming languages so as to give makers several options when creating their projects. These include C++, Pure Data (Pd), SuperCollider, and Csound.

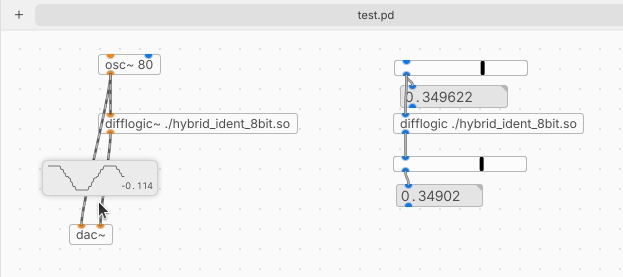

Thus, the first goal of the project was to build wrappers to enable the use of logic gate networks in these various languages, similar to what nn~ offers for neural networks.

The starting point was the built-in functionality that difflogic offers for exporting a trained model to C source code. First, I created wrappers that would build a plugin (Pd external, SuperCollider UGen, etc.) with a given network “baked in” to the compiled plugin. The baked-in approach is suitable for compiled languages such as C++ and Faust, where changes to the project already require compilation. For more dynamic languages (Pd & SuperCollider), however, this approach has drawbacks—switching models (even to a subsequent version of the same model, after further training) requires recompiling and reloading the plugin. And using multiple models requires building multiple versions of what is mostly the same plugin, giving each a different name to avoid collisions.

A more flexible approach: compile the difflogic-generated C into a shared library and dynamically load it using (on Linux or macOS) dlopen(). This is the approach taken by the most complete wrapper, a difflogic external for Pure Data, which supports loading difflogic DLLs and comes with both signal (difflogic~) and message (difflogic) versions of the object.
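The same load-at-runtime mechanism can be sketched from Python, whose ctypes module opens shared libraries via dlopen() on Linux and macOS. This is only an illustration of the idea, not the Pd external’s code (which is written against the C API), and the helper name is made up for this example.

```python
import ctypes


def load_function(library_path, symbol, argtypes, restype):
    """Open a shared library (dlopen under the hood) and look up one function."""
    library = ctypes.CDLL(library_path)
    function = getattr(library, symbol)
    function.argtypes = argtypes
    function.restype = restype
    return function


# Hypothetical usage with a compiled network; the file and symbol names
# below are placeholders, not names taken from difflogic or the wrapper:
#   net = load_function("./my_network.so", "run_network", [...], None)
```

Swapping models then amounts to calling `load_function` again with a different path, with no need to rebuild the host plugin.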

Further flexibility could be attained by loading a description of the network and either interpreting it (as in the interactive application described below) or perhaps using just-in-time (JIT) compilation, but this would come at the cost of performance and/or plugin complexity.

BeagleBoard integration

The language integrations described above are useful on Bela (because Bela supports those languages), but they are also usable elsewhere (e.g. Pd running on your laptop). I also explored integrations more specific to the BeagleBone Black upon which Bela is built.

In addition to the main CPU (a 1 GHz ARM Cortex-A8), the BeagleBone Black features two 200 MHz programmable real-time units (PRUs). The Bela uses one PRU for its own purposes (principally low-latency I/O), but the other is free for custom use. The PRU has a rather spartan instruction set, lacking both a hardware multiplier and floating-point support. Fortunately for us, logic gate networks require neither.

Thus, I explored the possibility of running logic gate networks on a PRU in parallel to the main CPU (which runs the audio thread and other tasks). Initially, I approached this by attempting to compile difflogic-generated C using TI’s PRU compiler toolchain and integrating the generated code into the Bela example project featuring custom PRU assembly. However, in addition to being generally very hacky and unwieldy, this approach was complicated by the fact that Bela uses the uio_pruss driver to interface with PRUs, whereas it seems TI has moved on to remoteproc (and taken their toolchains along for the ride).3

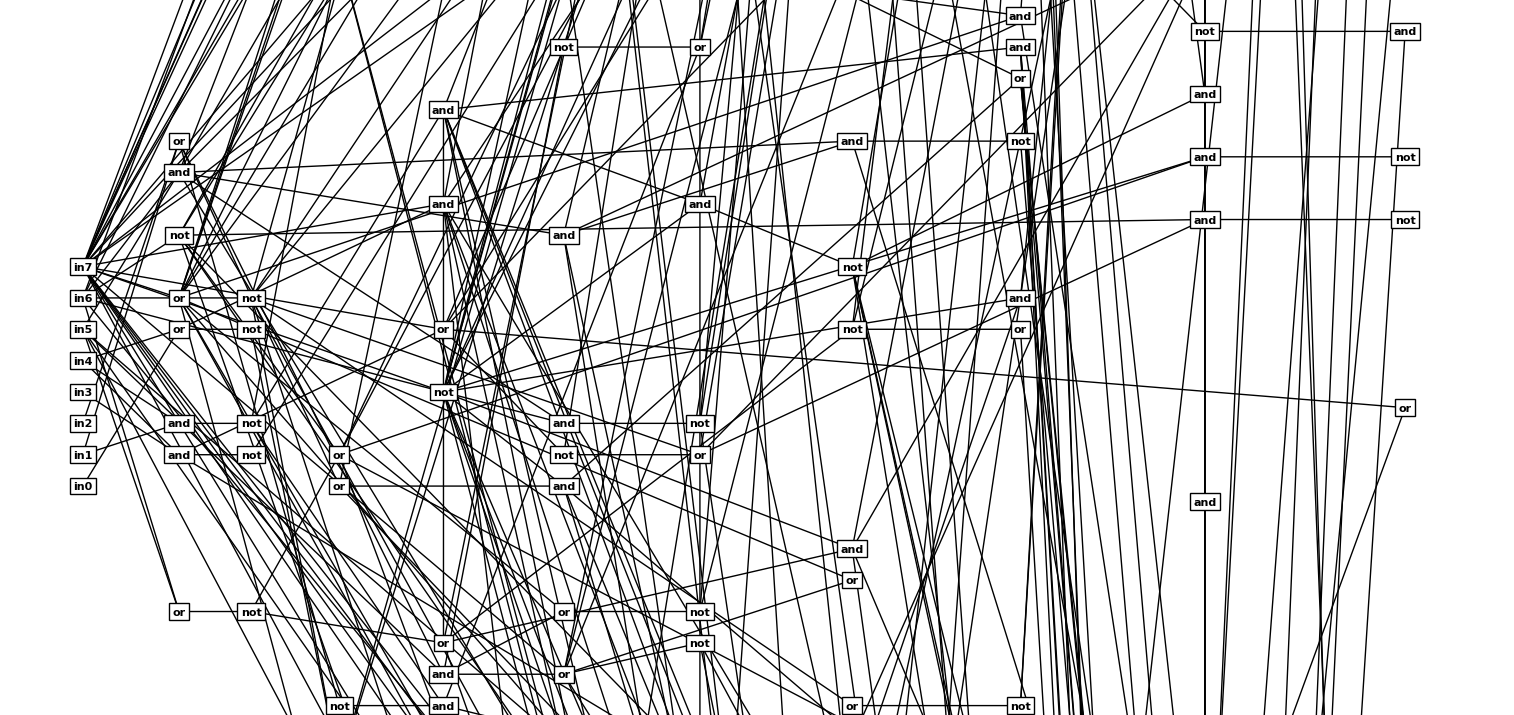

Rather than go further down this rabbit hole, I decided to change tack and generate PRU assembly directly, without going through a C compiler. In addition to getting my hands dirty with the PRU’s instruction set, this involved taking on some of the tasks that a C compiler would (hopefully) do for us. I ultimately used the NetworkX library to pre-process and optimize the difflogic network before finally generating PRU assembly.
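One example of a task a C compiler would normally handle is dead-code elimination: dropping gates whose outputs never reach the network’s outputs. The sketch below shows that single pass on a hypothetical network description, using plain dictionaries rather than NetworkX so that it stays self-contained. It is an assumption about the kind of pre-processing involved, not a description of the passes actually used in the project.

```python
def prune_dead_gates(gates, outputs):
    """Keep only the gates that can influence at least one network output.

    gates maps a gate name to (op, input_a, input_b); any input name that is
    not itself a key of gates is treated as a primary network input.
    """
    live = set()
    stack = list(outputs)
    while stack:
        name = stack.pop()
        if name in live or name not in gates:
            continue
        live.add(name)
        _, input_a, input_b = gates[name]
        stack.extend((input_a, input_b))
    return {name: gate for name, gate in gates.items() if name in live}


# Hypothetical four-gate network: g2 feeds nothing, so it can be dropped.
example = {
    "g0": ("and", "x0", "x1"),
    "g1": ("xor", "x1", "x2"),
    "g2": ("or", "x0", "x2"),
    "g3": ("or", "g0", "g1"),
}
pruned = prune_dead_gates(example, outputs=["g3"])
```

With the network stored as a NetworkX directed graph, the same reachability question could be answered with `networkx.ancestors`.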

Experiments

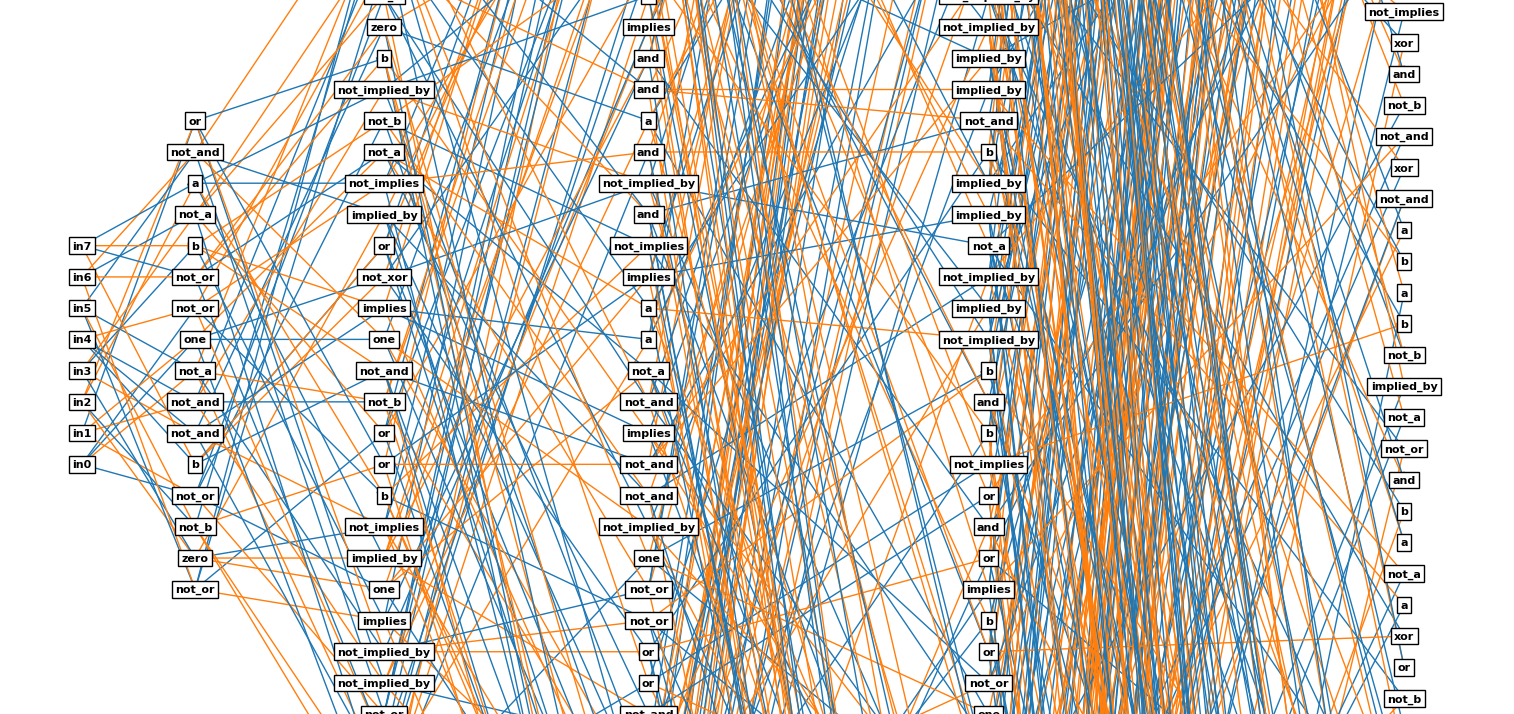

In order to build the wrappers & integrations described in the previous section, I first needed some logic gate networks to work with. Thus, I began by training some small and fairly trivial networks, learning a bit about difflogic along the way.

Compared to neural networks (which use floats throughout), we face additional questions about representation when working with logic gate networks, which deal only in bits. How should we encode real values (such as audio samples) at the input & output of a logic gate network? An obvious approach is to use the same representation that your computer ordinarily does: positional binary encoding (i.e. 0b1011 = 2**3 + 2**1 + 2**0 = 11), followed by rescaling/offsetting as needed. However, in their work, Petersen et al. instead use GroupSum, which sums groups of bits at the output of the network.

Intuitively, using GroupSum (and correspondingly thermometer encoding for inputs) has the effect of smoothing out the basic binary weirdness of logic gate networks in exchange for increased redundancy. Representing 256 different values, for example, takes 255 output bits instead of the 8 that it takes for positional encoding.
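The two representations can be compared side by side in a few lines of Python. This is a minimal sketch with made-up helper names; the `group_sum` function only counts bits per group and leaves out any scaling that a library implementation might also apply.

```python
def positional_encode(value, n_bits):
    """Binary digits of value, most-significant bit first."""
    return [(value >> shift) & 1 for shift in reversed(range(n_bits))]


def positional_decode(bits):
    """Inverse of positional_encode: weight each bit by its power of two."""
    value = 0
    for bit in bits:
        value = (value << 1) | bit
    return value


def thermometer_encode(value, n_levels):
    """Unary code: the first `value` of n_levels - 1 bits are 1, the rest 0."""
    return [1 if i < value else 0 for i in range(n_levels - 1)]


def group_sum(bits, n_groups):
    """Split bits into n_groups equal groups and count the ones in each."""
    size = len(bits) // n_groups
    return [sum(bits[g * size:(g + 1) * size]) for g in range(n_groups)]
```

Flipping one bit of a thermometer code changes the decoded value by exactly 1, whereas flipping the most-significant bit of an 8-bit positional code changes it by 128, which hints at why the unary form is smoother.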

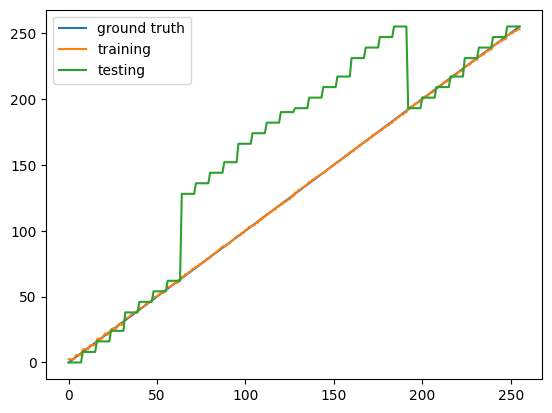

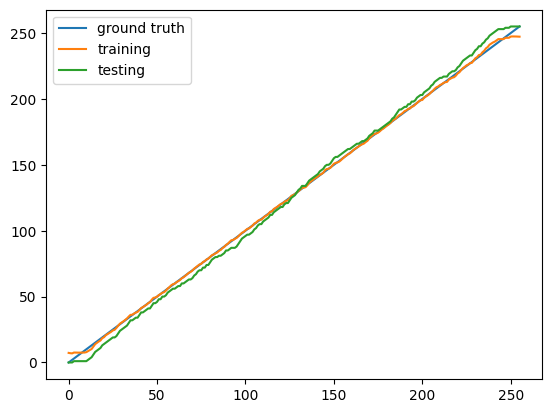

In my small-scale experiments, I found markedly different behavior when using networks with binary positional encoding versus GroupSum (unary) encoding. Both kinds of networks could learn functions, but the binary representation was much more strongly affected by discretization, losing more accuracy when switching from training mode to inference mode than a GroupSum network of comparable size and exhibiting “jagged” behavior. The following plots demonstrate this with tiny networks trained to learn the identity function.

Binary (positional) encoding:

Unary (thermometer & GroupSum) encoding:

Input/output representation issues also complicated my plans to try combining difflogic with other ML techniques, such as differentiable digital signal processing (DDSP). The problem is that the conversion between floating point scalars and binary vectors (either in positional representation or unary encoding) is only differentiable one-way. For instance, if we have a vector of bits [a b c d] positionally encoding a scalar, we can compute the corresponding scalar as 1*a + 2*b + 4*c + 8*d, which we can easily differentiate with respect to each variable. Going the other way, from a scalar to a bit vector, we need to “split” the value into several discrete slots, and it’s not obvious how to go about this in a differentiable way. This question is moot when we’re feeding our data directly into a logic gate network; we can just convert the values beforehand. But if we’re trying to train our logic gate network as part of a larger hybrid model, where the logic layers may follow neural layers, we have an open problem.
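The asymmetry can be checked numerically. In the sketch below (helper names made up for this example), the bits-to-scalar direction has a well-defined slope for every bit, while the scalar-to-bits direction is a staircase whose slope is zero everywhere except at the jumps, leaving gradient descent nothing to follow.

```python
WEIGHTS = [1, 2, 4, 8]  # weights for bits [a, b, c, d], least significant first


def bits_to_scalar(bits):
    """Weighted sum 1*a + 2*b + 4*c + 8*d; also defined for fractional 'soft' bits."""
    return sum(w * bit for w, bit in zip(WEIGHTS, bits))


def scalar_to_bits(x):
    """Round to an integer in 0..15 and split into bits: a staircase in x."""
    n = max(0, min(15, int(round(x))))
    return [(n >> k) & 1 for k in range(4)]


def finite_difference(f, x, eps=1e-3):
    """Numerical derivative of a list-valued function of one scalar."""
    hi, lo = f(x + eps), f(x - eps)
    return [(h - l) / (2 * eps) for h, l in zip(hi, lo)]


# Bits -> scalar: the slope with respect to bit c is its weight, 4.
soft_bits = [0.2, 0.9, 0.5, 0.1]


def vary_c(c):
    return [bits_to_scalar([soft_bits[0], soft_bits[1], c, soft_bits[3]])]


slope_c = finite_difference(vary_c, soft_bits[2])[0]

# Scalar -> bits: away from the rounding boundaries every slope is 0.
slopes = finite_difference(scalar_to_bits, 5.2)
```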

As this seemed like a research project in its own right, and GSoC is oriented towards functional outputs, further ML experiments were mostly sidelined in favor of the more concrete work in the other parts of the project.

Applications

Finally, I wanted to build some concrete applications putting logic gate networks to work. I ultimately focused on a specific creative application inspired by conceptual affinities between logic gate networks and bytebeat expressions.

As mentioned previously, bytebeat expressions encode music in the form of expressions that compute audio samples directly as a function of time (sample index). I took the same approach with logic gate networks, treating them as sample-generating functions of time.

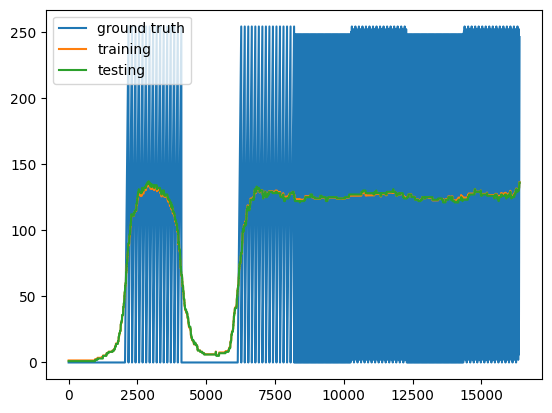

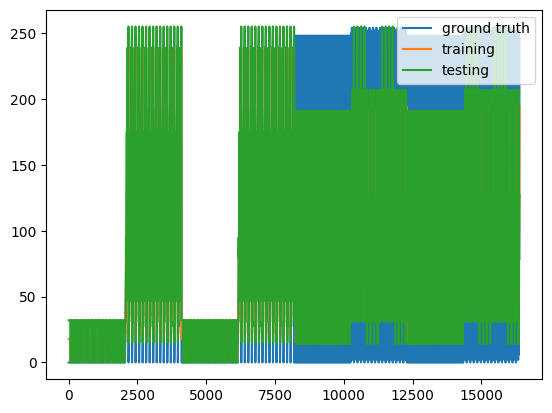

Initially, I tried training some difflogic networks on the output of bytebeat expressions. Related to the encoding issues described above, I found that GroupSum had a strong smoothing effect on the learned signal, with the result that the network output sounded like quiet noise. Subsequently, I decided to instead embrace the weirdness of raw (positional) bits and work with GroupSum-free synthesis networks!

Example: original audio for expression ((t >> 10) & 42) * t (first two seconds)

Output of unary (thermometer & GroupSum) network:

Output of binary (positional) network:

With this approach, I found that difflogic was able to train networks that did a reasonable job of imitating bytebeat expressions (even with a naïve loss function and without the benefit of +, *, etc.). What’s more, soon I found that even totally random (untrained) logic gate networks often sounded surprisingly interesting. This observation led me to focus less on sound reconstruction and more on creating a compelling interaction for manipulating sound-generating logic gate networks.

To that end, I built real-time visualization for synthesis networks. The visual feedback immediately suggested some intuition for why small logic gate networks, featuring simple positional binary encoding, might tend to sound “musical”.

To elaborate, the input to the network is a sample counter. This counter functions as a binary clock, and its size in bits determines the overall periodicity of the network (how much audio it can generate before repeating). For example, in a network with 16 bits of input generating audio at a sample rate of 8 kHz, the input will overflow and repeat itself every 2**16 / 8000 = 8.192 seconds. But crucially, each bit of input loops at a different period. The least-significant bit flips between 1 and 0 every single sample, with a period of just 250 µs (or a frequency of 4 kHz). This period doubles for the next most-significant bit, and so on up to the most-significant bit, which has a period of 8.192 seconds.
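The arithmetic for every bit of the counter follows the same pattern, which can be spelled out in a short sketch (the function name is made up for this example):

```python
SAMPLE_RATE = 8000  # Hz
N_BITS = 16


def bit_period_seconds(bit_index, sample_rate=SAMPLE_RATE):
    """Period of one counter bit; bit 0 is the least significant.

    Bit k holds each value for 2**k samples, so a full 0 -> 1 -> 0 cycle
    takes 2**(k + 1) samples.
    """
    return 2 ** (bit_index + 1) / sample_rate


periods = [bit_period_seconds(k) for k in range(N_BITS)]
# periods[0] is 0.00025 s (250 µs, i.e. 4 kHz); periods[15] is 8.192 s.
```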

The rest of the network is just logic gates applied to these inputs, with the result that every gate’s output is some combination (in the sense of interference patterns) of these power-of-two periodicities. The entire network is biased towards subdivisions of powers of two.4 Because the network generates audio at the sample level, simultaneously determining every level of musical hierarchy (from timbre to form), this effect is pervasive.

Following network visualization, I worked on network manipulation. I designed an interaction inspired by circuit bending practices allowing the player to perform “brain surgery” on a live running network by masking out various gates, temporarily replacing them with constant values. In order to make this responsive, I built a simple network interpreter so that the network could be modified directly in memory (rather than re-exporting the network to C, recompiling that C, and then reloading the compiled network).
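A network interpreter with gate masking can be quite small. The following is a minimal sketch of the idea, not the project’s actual interpreter: the network layout, the `masks` dictionary, and all names are assumptions made for this example. The input is the sample counter’s bits, and the last layer is read positionally to form the output sample.

```python
OPS = {
    "and": lambda a, b: a & b,
    "or": lambda a, b: a | b,
    "xor": lambda a, b: a ^ b,
    "nand": lambda a, b: 1 - (a & b),
}


def run_network(layers, t, n_in, masks=None):
    """Evaluate the network at sample index t and return one output sample.

    layers is a list of layers; each gate is (op, i, j), where i and j index
    into the previous layer's outputs (the bits of t for the first layer).
    masks maps (layer_index, gate_index) to a forced constant 0 or 1.
    """
    masks = masks or {}
    values = [(t >> k) & 1 for k in range(n_in)]  # counter bits, LSB first
    for layer_index, layer in enumerate(layers):
        next_values = []
        for gate_index, (op, i, j) in enumerate(layer):
            forced = masks.get((layer_index, gate_index))
            if forced is None:
                next_values.append(OPS[op](values[i], values[j]))
            else:
                next_values.append(forced)
        values = next_values
    # Read the last layer positionally (LSB first) to form the sample.
    return sum(bit << k for k, bit in enumerate(values))


# Hypothetical two-layer network on a 4-bit counter.
layers = [
    [("xor", 0, 1), ("and", 1, 2), ("or", 2, 3), ("nand", 0, 3)],
    [("or", 0, 1), ("xor", 1, 2), ("and", 2, 3), ("xor", 0, 3)],
]
```

Because the network is just data, adding or removing an entry in `masks` between samples changes the sound immediately, with no export, compile, or reload step.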

The final application can run both on the Bela (using the Trill Square sensor for the network bending interaction) and in the browser (for easy demoing and greater flexibility of configuration). The browser version runs the network interpreter as a WebAssembly module in an Audio Worklet.5

Just for fun, I embedded a synthesis network into a ScoreCard, and I hope to incorporate these into my workshop next week (‼️🔜‼️) at NIME!

Future Work

My work on this project leaves several loose ends to follow. Indeed, there’s plenty of room to continue exploring both technically and creatively.

From the original plan, the least-developed part of the project is experimentation with ML. Given more time, I’d like to train some networks to do typical tasks (à la “model zoos”) to serve as examples of the utility of logic gate networks and for comparison with similarly-accurate neural networks. It would also be interesting to try replicating some existing techniques (DDSP, variational autoencoders, even just convolutional networks) with logic gate networks.

As for integrations, future work could include unifying the wrappers with the PRU work so that the wrappers can run models in parallel with the audio thread on Bela. It would also be nice to further simplify the workflow of using a network for inference on Bela after training it on another (more powerful) machine.

My work with synthesis networks in the creative application has raised numerous questions. While I would still like to explore the possibility of logic gate networks for sound reconstruction (a direction which probably involves larger networks, GroupSum, and MFCCs), I find myself captivated by the sounds of tiny, binary-encoding networks. It could prove illuminating to go beyond intuition and take a more analytical approach in considering the inductive/musical biases of such networks. I am also curious about the potential of tiny logic networks as compact representations of existing musical content (either at the audio level or symbolic level).

Overall, the project could use a bit more polish; TODOs abound. The pace of the summer didn’t leave much time for tying up one area of work before moving on to the next. I hope to find some time to tidy up and make the project more welcoming in the coming weeks (probably after NIME!).

Conclusion

I’ve enjoyed getting my hands dirty with difflogic and Bela this summer, and I look forward to doing more work with both in the future. Looking ahead, I hope to see others use and take inspiration from the work I’ve completed this summer.

To continue a tradition, here are a few remarks/bits of advice from my GSoC experience:

- Different host organizations have different expectations for contributions (in terms of proposal format, formal structure, communication methods, etc.).

- Scoping is (still) hard! Compared with my previous GSoC experience, I had less to do in terms of getting acquainted with existing codebases and old work, but a wider project scope overall.

- Now that I have an impressive sample size of two, I can conclude with certainty that GSoC is cursed: the end of the summer will always end up being more hectic than you expect, precisely when you need to focus on wrapping (and writing) things up.

- Demo videos are great! Do more of those.

Thanks to:

- Google for sponsoring another Summer of Code,

- BeagleBoard.org for hosting this funky project, providing hardware, and wrangling GSoC contributors,

- Bela for chipping in with additional hardware,

- my advisor, Jason Freeman, for his support and flexibility,

- my mentors, Jack Armitage and Chris Kiefer, for their support and many engaging conversations,

- their respective labs, the Intelligent Instruments Lab and Emute Lab, for supporting their participation as mentors,

- and Felix Petersen for meeting with us and indulging our questions!

Finally, here are some links for this project:

1. Okay, yes, the project title is kind of a mouthful. “Better Faust on the Web” was definitely snappier.

2. scoping is hard!

3. See this thread for slightly more information.

4. Perhaps some kind of ternary logic network would be biased towards triplets and triple meters?

5. thus satisfying the cosmic rule that all of my projects must inevitably involve wasm or worklets (usually both) somehow